Finding Lost URLs

A week or so ago, a page by Professor Solomon called The Twelve Principles made the link rounds. The prof lays out a 12-step plan for finding any lost object. Most of the principles are mental tricks to get you back to the place you lost a physical object: your keys, your glasses, your cellphone, etc.

Unfortunately, the principles don't translate well to digital objects like URLs. You didn't stick that URL for the Xbox hacking How-To in your junk drawer, and it's not likely to be stuck in the "Eureka Zone" under your keyboard. But I lose URLs all the time. I remember something I saw on the web a couple weeks ago and I can't figure out how to get there again.

I don't have anything close to a 12-principle system for finding lost URLs, but I thought it'd be fun to examine my haphazard ways of re-finding web things. These are probably obvious, but I thought collecting them together would help me start a system for finding those lost pages, blog posts, and other digital artifacts that I'd like to see again.

1. Google - As you already know, Google is great at finding things, and I can usually get back to old URLs by remembering keywords for the document. Even if I don't find exactly what I was after, I can sometimes find good substitute information on the same subject. Unfortunately, a query like "SQL Remove Duplicates" will bring up thousands of documents, so if I'm looking for a specific bit of code I once found for removing duplicate records from a database, the search has to go to the next stage.

2. Browse Browser History - Ctrl-H in the browser will bring up your surfing history, and it can be a lifesaver if I know I visited the URL within the last week or two. It's especially helpful if I can remember roughly when I visited the page I want to find and sort the history by date. But because browser histories show only the domain and page title, they're not very useful if I simply remember the subject of the page. I don't think of pages in terms of the domains they're hosted on; I think in terms of the page's content. (Searching your browser cache with something like Google Desktop might be better because you can search the full text of your browsing history, but I haven't started using this regularly.)

3. Revisit Web Haunts - Chances are good that I found the link I'm looking for at one of the sites I read regularly. Since I follow hundreds of sites with the news reader Bloglines, this can be a big search. Unfortunately, the "Search My Subscriptions" feature at Bloglines isn't working for me, so I'll generally try to narrow down which site would have had the URL and then go back in time for each site individually using the "Display items within the last x" feature. Then Ctrl-F can help me find specific keywords within past posts. Google can also come in handy here. If I know I spotted a link about SQL on O'Reilly Radar, I can use the site: keyword like this: site:radar.oreilly.com SQL.

4. Search People - del.icio.us just rolled out a feature called "your network" that lets you track other del.icio.us members. There's no search yet, but you can browse back in time to see what the people you know have bookmarked. I think this'll be handy; I've already gone back into specific people's del.icio.us archives looking for a URL. Having them all in one place is good for browsing, and it saves time if I can't remember exactly who posted the link I'm looking for.
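
The site: trick from item 3 is easy to script if you find yourself typing it a lot. Here's a minimal Python sketch, assuming the standard https://www.google.com/search endpoint with its q parameter. The helper name site_search_url is hypothetical, my own invention rather than anything Google provides; the function only builds a URL and doesn't fetch anything.

```python
from urllib.parse import urlencode


def site_search_url(site, *keywords):
    """Build a Google search URL restricted to one site via the site: operator.

    Assumes the public https://www.google.com/search endpoint and its "q"
    query parameter; site_search_url itself is a hypothetical helper.
    """
    # e.g. "site:radar.oreilly.com SQL"
    query = " ".join([f"site:{site}", *keywords])
    # urlencode percent-encodes the colon and turns spaces into "+"
    return "https://www.google.com/search?" + urlencode({"q": query})


# prints https://www.google.com/search?q=site%3Aradar.oreilly.com+SQL
print(site_search_url("radar.oreilly.com", "SQL"))
```

Paste the result into a browser (or a bookmarklet) and you get the same results as typing site:radar.oreilly.com SQL into the search box.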

del.icio.us leads into my primary strategy for finding lost URLs: make links more findable before they're lost. Here's how I do it.

1. Use Web-based Bookmarks - I use del.icio.us (my bookmarks), but there are a bunch of web bookmark systems out there. When I come across a URL I know I'm going to want to get back to at some point, I'll click the del.icio.us bookmarklet and tag it. Searching my del.icio.us bookmarks is easy, but as with browser history, you're only searching titles, tags, and notes, not the full text of the page you bookmarked. Yahoo's My Web and Google's Personalized Search both do better on the searching front, which leads to...

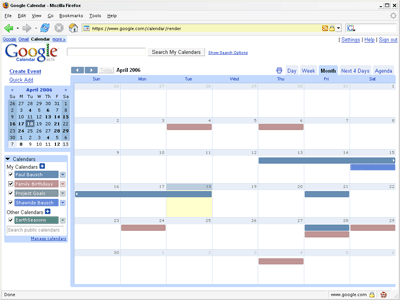

2. Turn on Search History - Privacy implications aside, I've found Google's Personalized Search handy for finding lost URLs even though I have mixed feelings about it. Once enabled, Google will remember every query you make and every search result you clicked on. You can then search just those sites that you clicked on in the past. Of course, that means everything you've searched for and every site you've clicked on is stored in a digital archive somewhere. I go back and forth, but privacy usually trumps findability for me so I might remove this option from my toolbox soon.
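
To make the titles-tags-and-notes limitation of bookmark search concrete, here's a small Python sketch. The Bookmark record and search_bookmarks function are hypothetical, loosely modeled on what a tagging service stores for each link; they are not del.icio.us's actual data model or API. The optional page_text field stands in for the full-text search that tag-only bookmarks lack, and the example URLs are placeholders.

```python
from dataclasses import dataclass, field


@dataclass
class Bookmark:
    """A hypothetical bookmark record, loosely modeled on a tagging service."""
    url: str
    title: str
    tags: list = field(default_factory=list)
    notes: str = ""
    page_text: str = ""  # full page text; searched only when include_text=True


def search_bookmarks(bookmarks, term, include_text=False):
    """Return bookmarks whose title, tags, or notes contain term (case-insensitive).

    With include_text=True the saved page text is searched too, which
    approximates full-text search over what you bookmarked.
    """
    term = term.lower()
    hits = []
    for b in bookmarks:
        fields = [b.title, b.notes, *b.tags]
        if include_text:
            fields.append(b.page_text)
        if any(term in f.lower() for f in fields):
            hits.append(b)
    return hits


# Placeholder data for illustration only.
marks = [
    Bookmark("https://example.com/dedupe", "Cleaning up a table",
             tags=["sql", "howto"],
             page_text="How to delete duplicate rows in SQL"),
    Bookmark("https://example.org/xbox", "Xbox hacking how-to",
             tags=["xbox", "hardware"]),
]

# prints [] because "duplicate" appears only in the page text
print([b.url for b in search_bookmarks(marks, "duplicate")])
# prints ['https://example.com/dedupe']
print([b.url for b in search_bookmarks(marks, "duplicate", include_text=True)])
```

The point of the toy: unless I happened to tag or annotate a bookmark with the word I remember, a title-and-tags search won't find it, no matter how prominent that word is on the page itself.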

I should echo Professor Solomon's 13th principle: sometimes you can't find what you're after and you have to give up. The Web is ephemeral and pages come and go all the time. Even though it's maddening not to be able to get back to a document I know I've seen, that's life. What strategies am I missing?
